The EU law brings new rights

The Digital Services Act is a new European Union law that aims to reduce digital violence and other online harms. Whether business networks, gaming platforms or dating services: from 17 February 2024, all online platforms and search engines in the European Union that allow the spreading of user-generated content must follow new rules. For the most common social media platforms, like Instagram, TikTok or YouTube, these rules will already apply from 25 August 2023. In this guide, we help you prepare to exercise your new rights on social media.

You do not need to read this whole guide to get the tools you need. You can just choose the question you’re interested in and click on it.

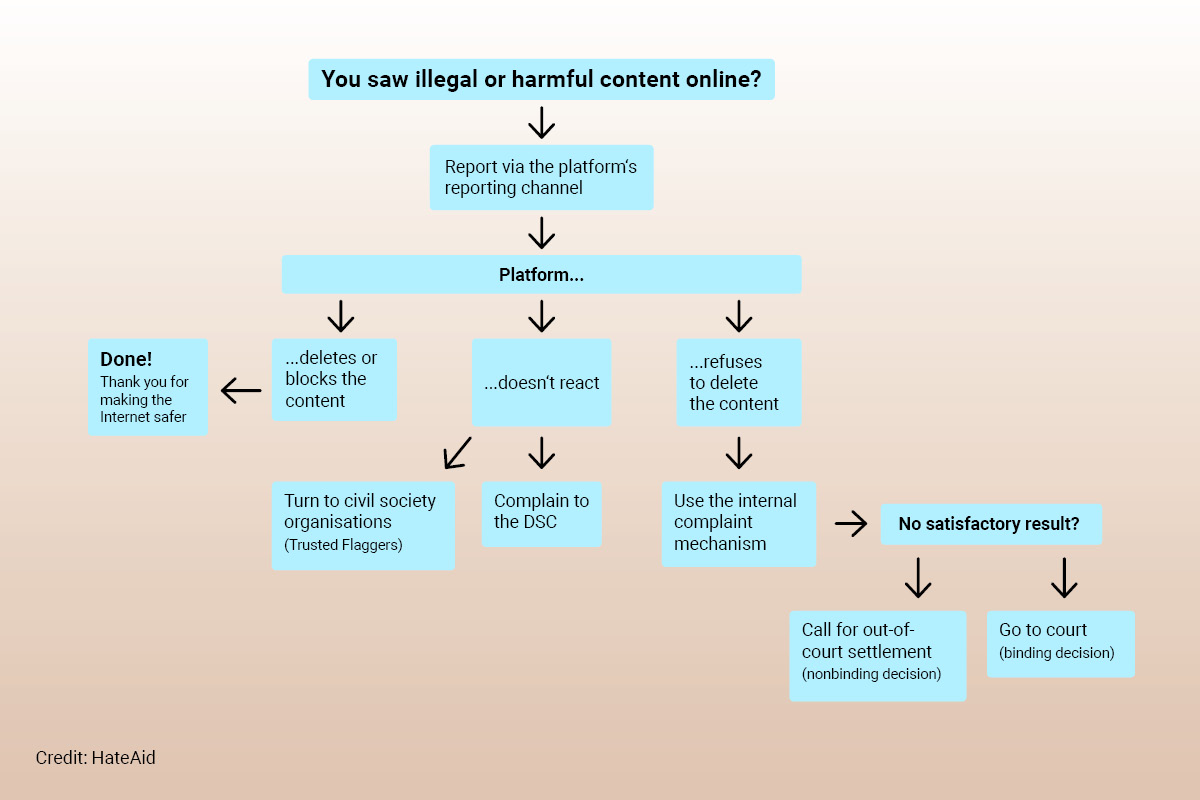

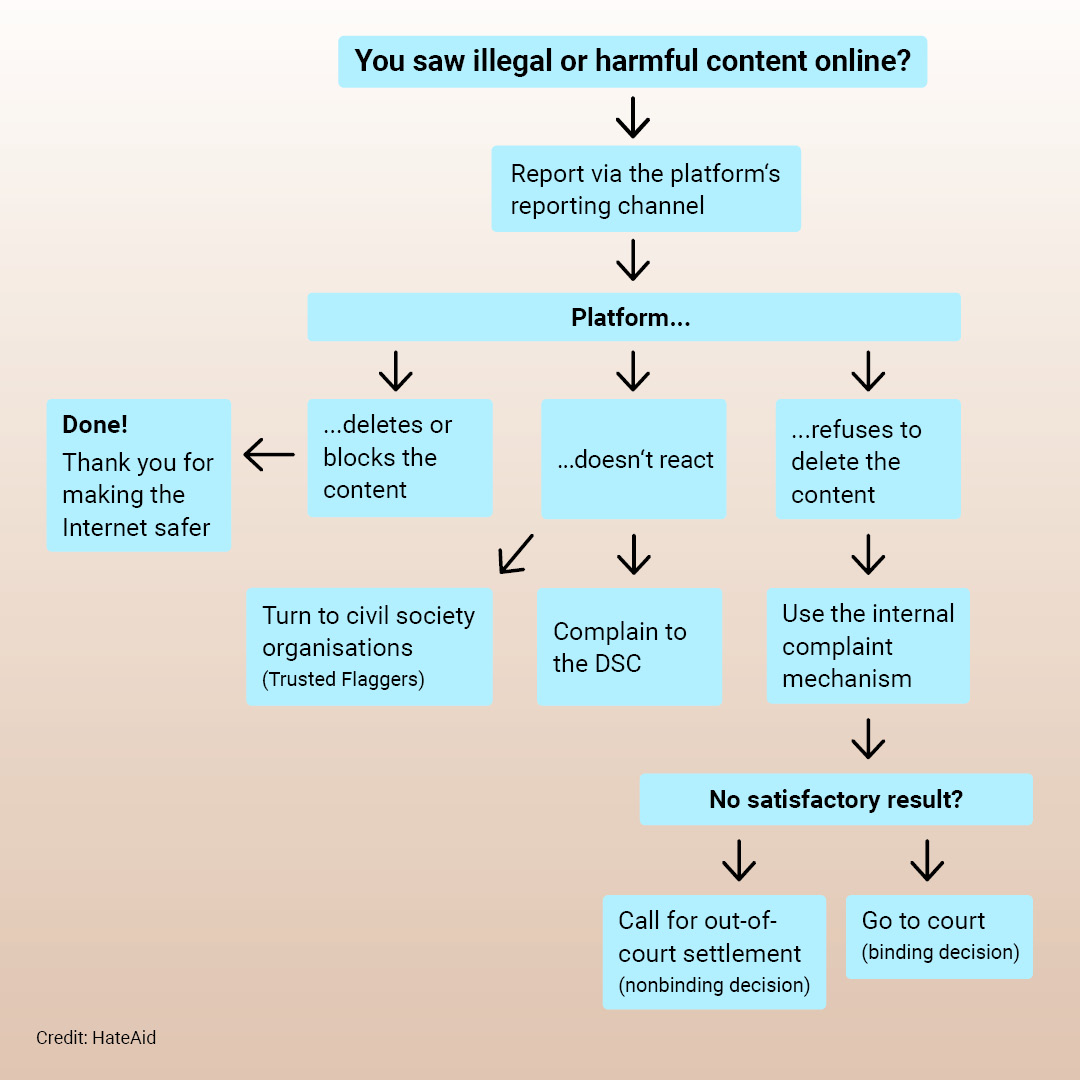

This flow chart guides you through the possibilities you have when reporting hate on social media.

A better internet for everyone

We encourage you to make use of your rights as they can ensure that online platforms are held accountable. This way, you contribute to making the internet a safer place for everyone.

Your questions, our answers on the DSA

Content reporting

The Digital Services Act gives you the opportunity to report content via a platform’s reporting channel. Whenever you see potentially illegal content, whether or not it attacks you personally, you should report it. This helps hold online platforms accountable and can make the internet a safer place.

You can report illegal content as well as problematic content that violates a platform’s internal rules. The latter can include, for example, disinformation or hate that platforms say they do not allow under their terms and conditions.

This is what we recommend:

Feel free to report any content that looks potentially illegal to you, as it may be subject to removal under the online platform’s terms of service. This also applies to disinformation, which can generally be reported through the reporting channels that all platforms offer. You don’t have to be sure that the content is illegal, as it is not your task to decide that.

Nice to know:

The Digital Services Act primarily refers to illegal content, but it does not specify what kind of content is illegal. Therefore, what counts as illegal content is determined by the law of the respective member state. The platform then has to remove the content if it proves to be illegal. If the content is not illegal everywhere in the EU, the platform may apply geoblocking, which means that the content is only blocked in some member states. Moreover, platforms might not be obliged to apply stricter rules than those applicable in the member state where their headquarters or their legal representative is located.

You can report abusive content directly on the online platforms you are using. All online platforms must provide a reporting channel for users to report potentially illegal content.

You can either provide all your data or stay anonymous: platforms must allow both. You can also report content if you are not a registered user of the platform. In any case, due to data protection rules, the platform is not allowed to reveal your name to the uploader of the content you reported.

Platforms must inform you of their decision in a timely manner; usually, this should not take more than 24 hours. If the response takes much longer or you don’t receive a reply at all, you can complain to the Digital Services Coordinator.

This is what we recommend:

- Use the platform’s own reporting channel. You could use other ways, such as emailing the platform, but you would then miss out on features such as a confirmation of receipt for your report.

- For anonymous reporting, you can provide a non-personalised email address. This protects your privacy while keeping you informed about how your report is being handled. If the platform does not allow anonymous reporting, you can complain to the Digital Services Coordinator.

- You can improve the processing of your report by providing the exact URL/place of the content in question and all the context information necessary for a legal assessment of the content.

Nice to know:

- Even though the platform must not reveal your name to the uploader, it might share the context of your report with them. In some cases, this might allow the uploader to identify you.

- In rare cases, for example in defamation cases, information about your identity might be necessary for the legal assessment. In such cases, your report might be rejected if you submitted it anonymously.

What counts as illegal content depends on your national law. Examples of potentially illegal content:

Insults, threats, disinformation, sexist comments, racial slurs, intimate images, …

Wrongful blocking

If you think that your profile or content was blocked wrongfully, you should use the internal complaint mechanism offered by the platforms. You can also use the out-of-court settlement mechanism or turn to a court to have the decision overruled.

Unanswered report

You are not powerless even if a platform does not answer your report, takes too long for the answer or rejects your report. You still have different options to request the platform’s reply or to have the platform’s response reviewed. Usually, you have to be a registered user if you want to use these remedies.

1. Use the internal complaint mechanism

You can use the internal complaint mechanism to get a second review of your report of content.

You can initiate the internal complaint mechanism up to 6 months after you have been informed that your report was rejected. You can submit your complaint electronically and free of charge.

2. Turn to civil society organisations

If your report was not successful, you can turn to specific civil society organisations for support. These organisations are called trusted flaggers and can support you by using a privileged reporting channel. Trusted flaggers are designated by the Digital Services Coordinator of your member state and are there to help you. The European Commission will publish a list of trusted flagger organisations in a publicly available database in February 2024.

HateAid regularly supports users in reporting illegal and harmful content. As an organisation specialised in tackling online violence we can help. Feel free to get in touch with us via legal@hateaid.org.

3. Call for out-of-court settlement

For an independent assessment of your case, you can seek an out-of-court settlement. It can be arranged by an independent institution, for example a nonprofit organisation or a law firm. The European Commission will publish a list of out-of-court dispute settlement bodies on its website. The procedure should not take longer than 90 days.

4. Complain to the Digital Services Coordinator

You can complain to the Digital Services Coordinator whenever you believe you have witnessed or experienced a violation of the Digital Services Act.

This is what we recommend:

- When seeking an out-of-court settlement, choose a body that operates in a language that you know. You can use any out-of-court settlement institution, regardless of the member state where it is located. However, these institutions don’t have to offer their services in all official languages of the EU.

- Out-of-court settlement bodies do not only apply the law of a member state but can also consider other rules, such as codes of conduct.

Nice to know:

- The decision of the out-of-court settlement body is not binding on the platforms.

- Each out-of-court settlement institution sets its own rules of procedure.

- You might incur costs if you lose your case. These include your own expenses (for legal counselling, etc.) and possibly a small additional fee. If you win, the platform has to pay the costs of the procedure as well as any costs you incurred for legal representation.

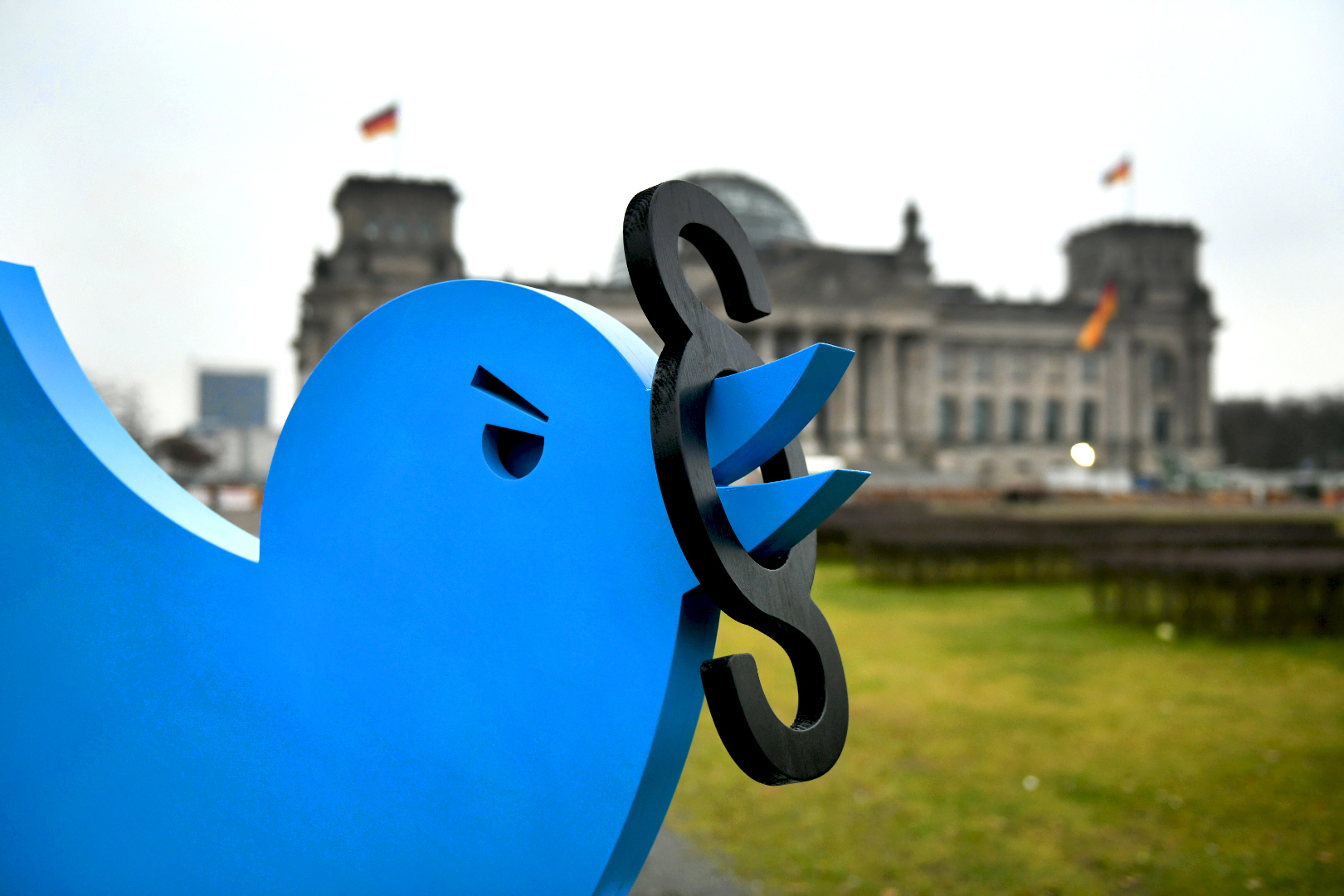

X (formerly Twitter) is among the very large online platforms to which the DSA applies as of 25 August 2023. Photo: HateAid

Go to court

Yes, you can claim your rights by turning to a court. The new rules of the Digital Services Act do not change that. But if your issue is about the removal of illegal content, you need to report the abusive content to the platform before turning to a court.

You do not need to have sought an out-of-court settlement or used other complaint options before going to court, and doing so brings you neither an advantage nor a disadvantage. If an attack against you qualifies as illegal content and the law of your member state allows it, you can turn to the competent court at any time.

Considering the cost risk of court proceedings and the fact that out-of-court mechanisms are meant to offer an additional, lower-threshold way to seek redress, it makes sense to try an out-of-court settlement first and then decide whether you still want to turn to a court.

Nice to know:

- Court proceedings usually involve a cost risk that varies from country to country.

- A lawsuit has to be filed in one of the official languages of your member state. If a platform has its headquarters in a member state with a different official language, additional costs may arise for translating documents.

- Whether content is illegal, and can therefore be the subject of a lawsuit, is determined by the law of your member state.

- In most jurisdictions, you can only turn to a court as a user in cases that personally affect your rights. For general violations of the Digital Services Act, you should consider filing an official complaint with the competent authorities (usually the Digital Services Coordinator).

For your help: The Digital Services Coordinator

The Digital Services Coordinator coordinates platform oversight at member state level. This institution is responsible for investigating violations of the Digital Services Act and for taking action against them. It can order platforms to end violations or impose sanctions on them.

Each member state has one or more competent authorities for oversight of the Digital Services Act. One of these authorities is the Digital Services Coordinator. These institutions will be designated by February 2024 at the latest.

The Digital Services Coordinator’s powers depend on the platform that you are complaining about. Very large online platforms are overseen by the European Commission, so the Digital Services Coordinator has more influence over smaller platforms.

Examples of very large online platforms:

Instagram, X (formerly Twitter), TikTok, LinkedIn, YouTube

Examples of smaller platforms:

Telegram, Tinder, Twitch, Discord, Mastodon, Pornhub, Etsy, Reddit

To file a complaint, you must turn to the Digital Services Coordinator of the member state where you are located (even if only temporarily) or where you are officially established. You don’t have to be a registered user of the platform that you are complaining about.

You should be able to file a complaint online, providing URLs or screenshots, but it should also be possible to complain in writing on paper or even orally.

After filing a complaint, you have the right to be heard and to receive appropriate information about the status of your complaint. This means that the Digital Services Coordinator has to confirm receipt and provide a case number. If this does not happen in your member state, please get in touch with us via legal@hateaid.org.

Possible results of your complaint can be:

A) The Digital Services Coordinator can uphold your complaint and issue orders or impose fines on the platforms.

B) If the Digital Services Coordinator you complained to is not responsible, it will forward your complaint to the competent Digital Services Coordinator in the member state where the platform has its headquarters or to the European Commission.

C) The Digital Services Coordinator can only refrain from forwarding the complaint in cases where it is manifestly inadmissible.

This is what we recommend:

We encourage you to file a complaint if a platform disregards your rights. The Digital Services Coordinator relies on users to shed light on online platforms’ shortcomings, because it cannot monitor all platforms at the same time.

What you can personally ask the Digital Services Coordinator for is to accept, assess and process your complaint against an online platform according to the law. But: the Digital Services Coordinator is not your personal lawyer in charge of bringing you justice.

The Digital Services Act obliges platforms to give you tools to report digital violence and file complaints. These tools, such as the reporting channels, points of contact for users and complaint mechanisms, must be user-friendly and easy to access.

This means:

- Easy to understand:

  - The tools must be available in all official languages of the member states where the platform operates. This means that if the platform is available in the member state you live in, the tools have to support that member state’s official language(s).

  - The tools must be easy to understand, without legal, technical or otherwise complicated wording.

- Easy to find:

  - The tools must be placed close to the content, so you don’t have to spend time searching for them.

- Easy to handle:

  - The tools must let you provide the information needed to assess the report: the exact location of the content in question (either by referring to it directly or by including a URL) and your reasons. This must be possible in the browser as well as in apps on smartphones or tablets.

- Rapid response:

  - You should not have to wait long for the platform’s response stating its decision and your options for remedies. Exactly how much time platforms have is still to be determined, but usually it should not take more than 24 hours. If it takes much longer, you can also use the internal complaint mechanism.

- Confirmed communication:

  - The platform must confirm that you submitted your request, so that you are able to prove that you raised your issue.

- Human communication:

  - The contact point that platforms have to provide must not rely exclusively on automated communication tools such as chatbots. You have the right to communicate with an actual human being. Most likely, platforms will provide an email address or contact form for this.

When your account or content is blocked, the platform must provide you with a statement of reasons. The statement of reasons has to include:

- the type and extent of the measures taken,

- the facts and circumstances that led to the decision, in particular whether the alleged violation was found through a user notice or a proactive investigation,

- whether automated means were used,

- where applicable:

  - the legal grounds, with an explanation,

  - the sections of the terms of service that were violated, with an explanation.

You can complain to the Digital Services Coordinator whenever you feel that your right to user-friendly reporting and complaint mechanisms has been disregarded.

There will be no negative consequences for you if you file a complaint based on true facts and it turns out that there was no violation. It is not your responsibility as a user to conduct a legal assessment or provide all the evidence.

Contact online platforms

Yes, you can get in touch with the platforms directly. Platforms have to designate an easy-to-access electronic point of contact, which means you should not have to search for it. The point of contact should be placed in or near the contact section of each online platform.

Nice to know:

You cannot rely on the electronic point of contact to serve documents for preparing or conducting a lawsuit in a legally secure way. Most member states do not have agreements with online platforms on the electronic delivery of documents.

Do you have a question that this guide does not answer? Let us know what information you are missing. We want to provide you with the tools you need to claim your rights, so we’d be happy to hear from you and extend this guide.