The Judgement in the case Künast vs. Facebook

What does it mean for the Digital Services Act?

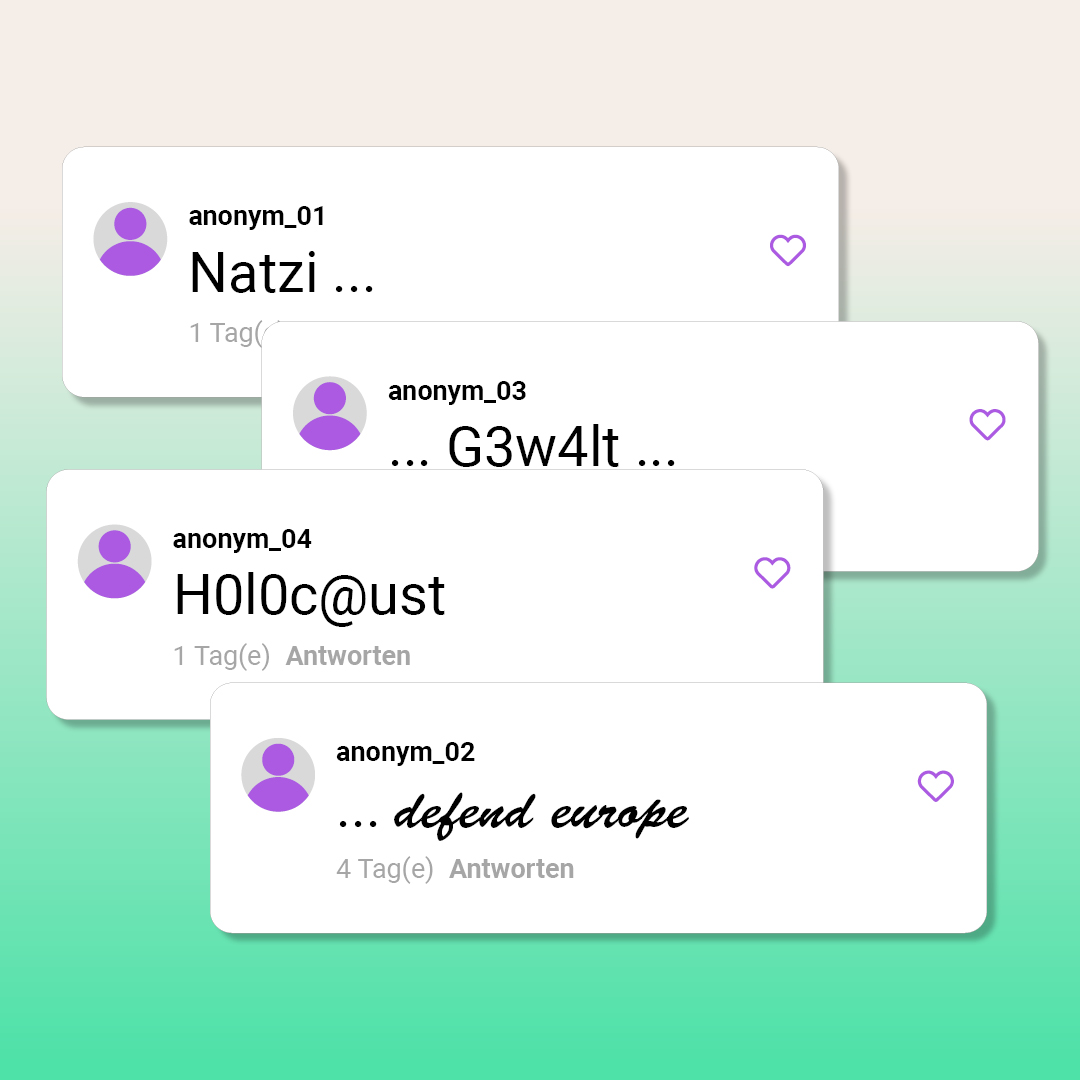

For seven years, a meme falsely attributing a quote to Renate Künast, a member of the German Bundestag, circulated on Facebook and was shared widely among its users, including by far-right accounts. Now, in a landmark ruling published on 8 April 2022, the Regional Court of Frankfurt am Main (Case 2-03 O 188/21) has found that Facebook is obliged to delete all posts containing the misquote. The judgement is a major success that will have an impact far beyond the individual case of MP Renate Künast, and the EU institutions negotiating the Digital Services Act (DSA) should read it closely.

The court obliges Facebook to identify and delete all identical and similar posts

The court ruled that Facebook is obliged to identify and delete all identical and similar posts containing the misquote which are accessible on its platform at the time of judgement.

Although the platform is not, and cannot be, obliged to generally monitor all uploaded content, it can be held liable as soon as it obtains actual knowledge of specific illegal content and does not take immediate action to delete it or restrict access to it. In this case, the court defines similar content as content that creates the impression that the quoted statement originates from the claimant. Conversely, content is no longer considered similar if it states that the quote is false and therefore does not create the impression that the statement originates from the claimant.

The verdict also states that as soon as MP Renate Künast reported the specific post, Facebook was obliged to identify and delete other identical and similar posts, even without being given a specific URL, because doing so was both technically and economically reasonable.

The Frankfurt Regional Court also awarded MP Renate Künast 10,000 euros in damages for pain and suffering, finding that Facebook was responsible for the violation of her personality rights and had failed to act after obtaining actual knowledge.

No upload filters are required or imposed: decisions should be made by human content moderators

The court expressly rejected Facebook’s claim that so-called upload filters would be necessary to identify and delete the content in question.

Instead, Facebook is required to use human content moderators to decide whether a post violates the claimant’s personality rights.

This is a very important part of the judgement. It underlines platforms’ responsibility to deploy sufficient human resources in content moderation at a time when platforms are investing more and more in automated systems to do this job.

What does the judgement mean for the Digital Services Act?

The judgement is not only a huge step forward for those affected by mass hate crime on online platforms; it should also influence the current negotiations on the DSA. The proposal for Art. 7 DSA currently under negotiation transfers Art. 15(1) of the e-Commerce Directive (the so-called ‘no general monitoring’ rule) into the DSA, but slightly changes its wording, which could threaten the positive developments seen in the existing case law.

The wording of Art. 15(1) of the e-Commerce Directive provides a good foundation for courts to deal with individual cases. The provision makes it possible to strike a balance between the conflicting interests of the parties while preventing a general monitoring obligation. The judgement in the Künast case is a very good example of this: the court was very clear that upload filters are not needed, and instead pointed out that human oversight is essential for dealing adequately with illegal content on platforms.

Effective measures are needed

The existing case law shows that Art. 15(1) of the e-Commerce Directive is a success story. Its wording allows courts, at both national and European level, to protect platforms from disproportionate filtering obligations while also requiring effective measures that protect individuals against specific items of illegal content on the internet.

Art. 15(1) of the e-Commerce Directive should therefore be transferred to the DSA without any changes, in order to maintain the status quo. Even slight differences or amendments could create legal uncertainty, especially with regard to the existing case law. The provision sets clear limits for all measures of rights enforcement while leaving law enforcement sufficient flexibility to adopt adequate measures in specific cases.

Furthermore, the European Parliament’s proposal for Art. 7(1a) DSA goes even further than these changes: it would prevent courts from imposing any obligation that involves the use of automated tools for content moderation, or any monitoring of content at all, even where the content is specified and the obligation is therefore not “general”. This points in completely the wrong direction. This far-reaching amendment fundamentally calls into question the courts’ sound and carefully reasoned development of Art. 15(1) of the e-Commerce Directive, and it should therefore not become part of the DSA.

Overview of the case Künast vs. Facebook

The judges ruled in our favour on all points. What exactly happened in court over the past years? And what does the ruling mean for all victims of digital violence?