Image-Based Sexual Abuse, Porn Platforms and the Digital Services Act

In December, the European Parliament’s IMCO committee adopted a report on the Digital Services Act, the EU’s internet law currently in the making. Among other additions, the committee proposed introducing requirements for user-generated pornographic content. The aim is to tackle image-based sexual abuse on porn platforms, a growing problem across Europe. HateAid asked experts in internet law and image-based sexual abuse to assess these proposals.

Commissioned by HateAid, Professor Clare McGlynn (Durham University) and Professor Lorna Woods (University of Essex) have authored the expert opinion “Image-Based Sexual Abuse, Porn Platforms and the Digital Services Act”.

Their four central findings are:

- Non-consensual content on porn platforms is easily accessible, often even on the front pages of the most popular porn platforms.

- Victims of image-based sexual abuse report life-shattering and life-threatening harms.

- Regulations for porn platforms are needed to help the victims.

- The DSA can guarantee minimum standards of protection against image-based sexual abuse.

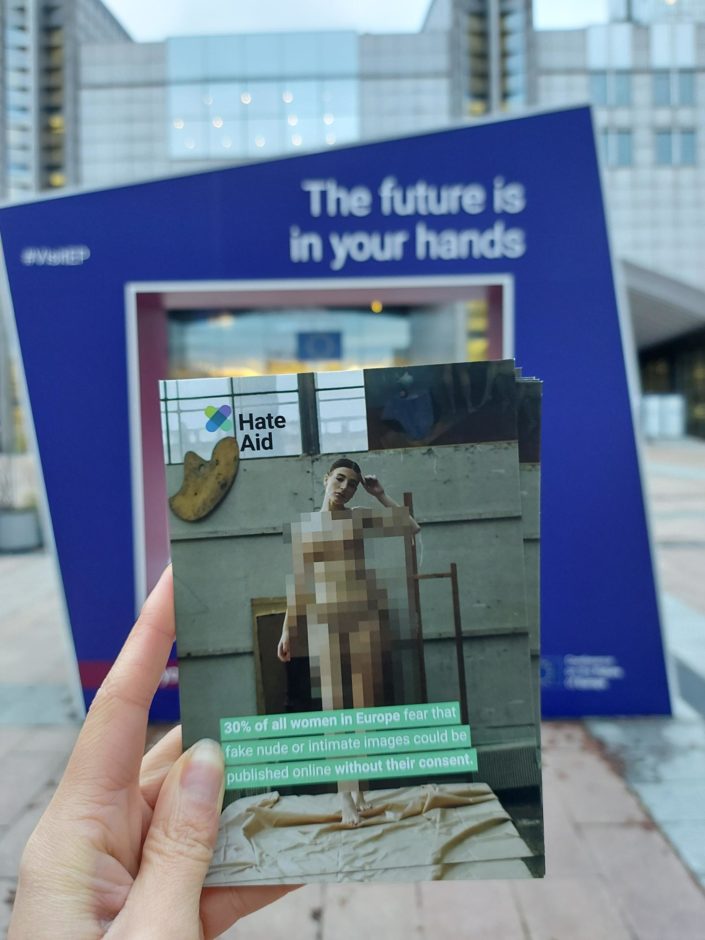

Fear of intimate pictures leaked online

Professors McGlynn and Woods note that data is scarce, but the evidence that is available points to a devastating situation. In 2020, over 100,000 images of Irish women and girls were leaked online, and similar websites were uncovered in Italy, where thousands of users shared sexual images without consent. Worse still, many women do not even know that their images have been taken or shared without their consent.

The consequences of image-based sexual abuse are severe. Victims whose images have been published on porn platforms without consent report life-shattering and life-threatening harms. Research shows that non-consensual content on porn platforms is easily accessible, often even on the front pages of the most popular porn platforms.

“When the videos appeared on PornHub it ruined my life, it killed my personality, it zapped the happiness out of me. It brought me almost two years of shame, depression, anxiety, horrifying thoughts, public embarrassment and scars.”

Source: ITV

Regulations are needed to help the victims

Porn platforms must be obliged to take action against this common form of abuse, the researchers state. The lack of effective self-regulation by porn companies is failing victims. Furthermore, the availability of image-based sexual abuse material on porn sites legitimises and normalises abuse. Regulation would not only help prevent abuse but also reduce the harm suffered by thousands of victims.

The researchers assess the amendment introducing a new Article 24b into the DSA, which is part of the IMCO DSA report, as establishing minimum standards of protection against image-based sexual abuse. They also conclude that the proposed provision is likely to be compliant with human rights. The provision recognises the significance of victims’ privacy rights, a State’s obligation to protect psychological integrity, and the fact that online abuse restricts women’s freedom of expression: factors that weigh heavily in any balancing exercise.

The proposed user verification processes would assist law enforcement and would likely reduce the amount of non-consensual content disseminated. The provision for trained content moderation would help identify non-consensual imagery and could strengthen notification and take-down procedures for harmful content.